Rover's blog

Memory leak in Golang?

December 04, 2017

We already know, that Golang is a blazing fast language with one of the smoothest learning curve ever. Despite being easy to learn, there are issues which are not trivial to debug in the language and I feel like memory issues are one of them.

The language utilizes automated garbage collection, so there should be no memory issues then right? Well let’s take a look at a real life example that we encountered with my team.

Figuring out that something stinks

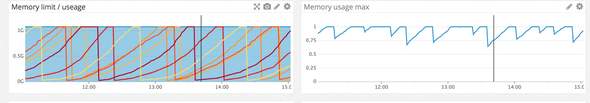

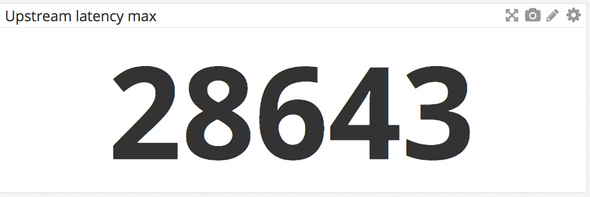

So we’ve spent quite some time in production since our product was released, but our memory issues got visible for us only when users actually started to use the application. When we reached 10k requests per minutes, it became unbearable. Our production environment is a Kubernetes cluster with 6 monolith nodes and a bunch of micro-services. It took about 40 minutes for a node to go down because of the 1 GB memory limit we set before to be a healthy margin.

As you can see if a node reached the limit, Kubernetes just killed and restarted it.

What does it cause ?

If there’s no memory left to allocate you are going to experience things like:

- Increased response time (And I mean REALLY increased times)

- CPU usage is sky rocketing

- Ungracefully terminated processes (Because of the forced restarts)

- Increased hardware requirements

- Consistent alarming from the monitoring system

If your application dies, it should be because of the number of requests you receive, and not because of an unfortunate memory leak. You could carry the load from the hardware side for a while, but these kinds of issues need immediate fixes.

CPU Graphs showing the moment of fail

As you can see we almost went up to half a minute sometimes. Just imagine yourself sitting in front of an application for half a minute doing absolutely nothing.

Putting out fire (Or how you should start debugging)

If you are trying to search the internet regarding memory issues of Golang, you are definitely going to find articles related to pprof.

pprof is for profiling your applications. There are a lot of in-depth guides on the internet about how this tool works, therefore I’m not going to write about this, but more about how we used it and what we saw.

First things first, we had to implement a little bit of code which is using the pprof package. Since we are always concerned about the security of our applications, we implemented it in the following way:

if config.Settings.Debug {

go func() {

debugR := mux.NewRouter()

debugR.HandleFunc("/debug/pprof/", pprof.Index)

debugR.HandleFunc("/debug/pprof/cmdline", pprof.Cmdline)

debugR.HandleFunc("/debug/pprof/profile", pprof.Profile)

debugR.HandleFunc("/debug/pprof/symbol", pprof.Symbol)

debugR.Handle("/debug/pprof/heap", pprof.Handler("heap"))

debugR.Handle("/debug/pprof/goroutine", pprof.Handler("goroutine"))

debugR.Handle("/debug/pprof/block", pprof.Handler("block"))

debugR.Handle("/debug/pprof/threadcreate", pprof.Handler("threadcreate"))

err = http.ListenAndServe(config.Settings.DebugListenAddress, debugR)

if err != nil {

log.WithError(err).Errorln(err.Error())

}

}()

}

As you can see, we are starting a new http server listening on a different port than our application. We do that using a new Goroutine, so it is able to work separately from the original application. This is needed due to the fact that we didn’t want to expose these endpoints for public use.

It is a general principle to never expose anything debug related in a production environment(especially not things like memory profiles). This way we can make sure that non-authenticated people won’t be able to receive any data from the pprof endpoints.

One more little touch, we wanted this whole debug feature to be able to be turned off. We made a new debug variable in our configuration settings, so despite having this feature in production, we could turn it off any time we wanted to.

The practical part

Code was ready, we deployed it to our dev environment. We wanted to wait until the memory is almost full and take a snapshot about the heap with the pprof endpoint to get the clearest picture possible about our memory leak.

The thing is that our dev environment received waaaay less traffic than our prod environment. Do not get me wrong, it still leaked, but it took almost a day for a node to go down. (Fun fact: our dev had only ~0.006% traffic compared to our prod)

We could wait until the right moment, or create some direct traffic for the endpoints, however, we felt confident enough to deploy it to our prod environment since these endpoints could be turned off, and they were only reachable from internal network.

After it was deployed, we just waited for the notification that a node has reached 90% memory. Steps needed to be executed:

- SSH into the given node container

- wget pprof endpoints from localhost, put the files into temp directory

- Exit from container

- Copy files including the binary from remote

- Run profiling

How profiling looks like

After we got all the files we needed (from the endpoints + the binary) we started to investigate. Our main focus was to take a look at the goroutines to see if they are stuck and after that examine the heap. This starts with a very easy step:

go tool pprof ./backend ./goroutine

(Notice here that the target is called goroutine, that’s because it is the result of the endpoint /debug/pprof/goroutine)

This is going to be the interactive mode of the tool. Here you can type help for all the commands, however, we are going to take a look to the result of the top command, which looked like this:

Showing top 10 nodes out of 48 (cum >= 1)

flat flat% sum% cum cum%

56 98.25% 98.25% 56 98.25% runtime.gopark

1 1.75% 100% 1 1.75% runtime/pprof.writeRuntimeProfile

0 0% 100% 47 82.46% bufio.(*Reader).Peek

0 0% 100% 1 1.75% bufio.(*Reader).Read

0 0% 100% 47 82.46% bufio.(*Reader).fill

0 0% 100% 1 1.75% database/sql.(*DB).connectionCleaner

0 0% 100% 1 1.75% database/sql.(*DB).connectionOpenerAs you can see the application goroutines spend most of their time with runtime.gopark which is fine, because it’s the goroutine scheduler. So this is kinda what we expected, we have no problem here.

The heap

Take a look at the main suspect, the heap:

go tool pprof ./backend ./heap

Showing top 10 nodes out of 69 (cum >= 669.43kB)

flat flat% sum% cum cum%

12299.37kB 32.67% 32.67% 12299.37kB 32.67% runtime.makemap

5632.21kB 14.96% 47.63% 5632.21kB 14.96% runtime.rawstringtmp

4097kB 10.88% 58.51% 27149.39kB 72.11% net/http.readRequest

3585.02kB 9.52% 68.03% 4254.44kB 11.30% runtime.mapassign

2560.31kB 6.80% 74.84% 2560.31kB 6.80% net/url.parse

2560.19kB 6.80% 81.64% 2560.19kB 6.80% context.WithCancel

2048.33kB 5.44% 87.08% 18956.01kB 50.35% net/textproto.(*Reader).ReadMIMEHeader

1043.17kB 2.77% 89.85% 1043.17kB 2.77% regexp.(*bitState).reset

1024.12kB 2.72% 92.57% 1024.12kB 2.72% regexp.progMachine

669.43kB 1.78% 94.35% 669.43kB 1.78% runtime.hashGrowHere, if you are previously installed graphviz (brew install graphviz) you can generate a pretty awesome svg which shows you how the application’s heap looks like with the web command. (You can use it to generate svg for your Goroutines as well, which are usually pretty interesting.)

What we see here is how many actual memory is being used. Golang works in a way, that it’s allocating memory sometimes, just to speed up future allocations based on its predictions. That means, if your application tends to use a lot of memory, then even if it loses all references for a given memory segment, the garbage collector won’t necessarily pick it up instantly, therefore it will remain as “allocated” for sometime.

As you can see, runtime.makemap and runtime.mapassign are both directly related to creating maps. (Others are related to maps indirectly as well, which is also a clue) Not doing anything fancy regarding regular expressions, our attention shifted from third party libs to our code again.

Caching?

What are maps good for? Quick access of data, unique keys for every value, and caching of course. It can be intentional or unintentional caching. After taking another look at our code from this perspective, we didn’t find caching, nor storing global map objects without ever clearing them.

This meant that somehow one of our third party packages was caching data without us knowing about it. With that in mind, it was easy to suspect our url router in the first place.

Gorilla mux

We use Gorilla Mux and it has worked for us so far. So where can Gorilla cache? There are a couple of options where you can set caching and storing with Gorilla. You could set it directly in the Router:

type Router struct {

...

// If true, do not clear the request context after handling the request.

// This has no effect when go1.7+ is used, since the context is stored

// on the request itself.

KeepContext bool

...

}

Since we used Go1.8 at that time, this one was fine.

You can set caching in the Gorilla context:

// Clear removes all values stored for a given request.

//

// This is usually called by a handler wrapper to clean up request

// variables at the end of a request lifetime. See ClearHandler().

func Clear(r *http.Request) {

mutex.Lock()

clear(r)

mutex.Unlock()

}

// clear is Clear without the lock.

func clear(r *http.Request) {

delete(data, r)

delete(datat, r)

}

...

// ClearHandler wraps an http.Handler and clears request values at the end

// of a request lifetime.

func ClearHandler(h http.Handler) http.Handler {

return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

defer Clear(r)

h.ServeHTTP(w, r)

})

So we can see 2 things here. The first one is that if we would like to clean our stored request contexts, we had to wrap the request into ClearHandler function

The second thing is that Clear itself uses a global mutex to clear the data cached by the Gorilla context. What that means is that this function is a blocking function regarding incoming http requests.

Both of the functions use delete which is a built-in function for deleting maps. Despite using it, we experienced that garbage collector still wasn’t able to collect the data which were allocated here.

When you start to learn Go, probably one of the first things you learn, is that you don’t have to free memory manually because of the garbage collector. If you still decide to do it, you can write code for it which is not recommended, or you can use the fact that GC frees up memory, when you have no more reference to a given memory segment.

So after digging more down into the source code of Gorilla, it turned out that this was not the first application producing these kinds of issues while using Gorilla. Therefore the developers being aware of this situation implemented the Purge function, which sounds much cooler than it actually is.

// Purge removes request data stored for longer than maxAge, in seconds.

// It returns the amount of requests removed.

//

// If maxAge <= 0, all request data is removed.

//

// This is only used for sanity check: in case context cleaning was not

// properly set some request data can be kept forever, consuming an increasing

// amount of memory. In case this is detected, Purge() must be called

// periodically until the problem is fixed.

func Purge(maxAge int) int {

mutex.Lock()

count := 0

if maxAge <= 0 {

count = len(data)

data = make(map[*http.Request]map[interface{}]interface{})

datat = make(map[*http.Request]int64)

} else {

min := time.Now().Unix() - int64(maxAge)

for r := range data {

if datat[r] < min {

clear(r)

count++

}

}

}

mutex.Unlock()

return count

}

As you can see what happens here, is that as a parameter it gets a maxAge value, which defines the maximum lifetime of the context we store. If we set it to zero or below, it will always create new maps for the requests. This means that the original contexts stored in data and datat are going to lose every reference in the application so the garbage collector is going to pick it up. However, if we set it to a value above zero, it’s going to check every request context if their lifetime is above that or not. It blocks every request while it runs so in an optimal situation you don’t want to call it too often.

In the worst case scenario we delete contexts which are still being used by the application, therefore creating a lot of serious issues. Yay?

The optional time for a request context to sit in the memory for us at that time, was about half a minute max. So we thought let’s make it a double, set the maxAge to a minute and we are good to go.

We could see the immediate change in our monitoring system.

Memory? Back to normal.

CPU Usage? Never been more optimal.

Summary

If you are trying to find a memory issue in any application, you always have to start with the code you have written. However, if the application is a little bit bigger, and has been running in production for a while, well, you might have to dig deeper.

Golang’s memory profiling has never felt more easier and the documentation about it is getting better every year. After seeing the power it brings to the table, when it comes to debugging memory issues, I have to say that this is one of the better solutions out there right now.

Experiencing a serious bug like this in a production environment is really nerve-racking, but after figuring out what is wrong, you can finally find the official issue about it.

Written by Imre Racz, tech enthusiast. Check LinkedIn